Understanding Stacks: Advanced Optimization

Deep dive into stack memory layout, call stacks, recursion limits, CPU cache performance, and when to choose stacks over alternatives.

Deep dive into stack memory layout, call stacks, recursion limits, CPU cache performance, and when to choose stacks over alternatives.

Author

Mr. Oz

Date

Read

12 mins

Level 3

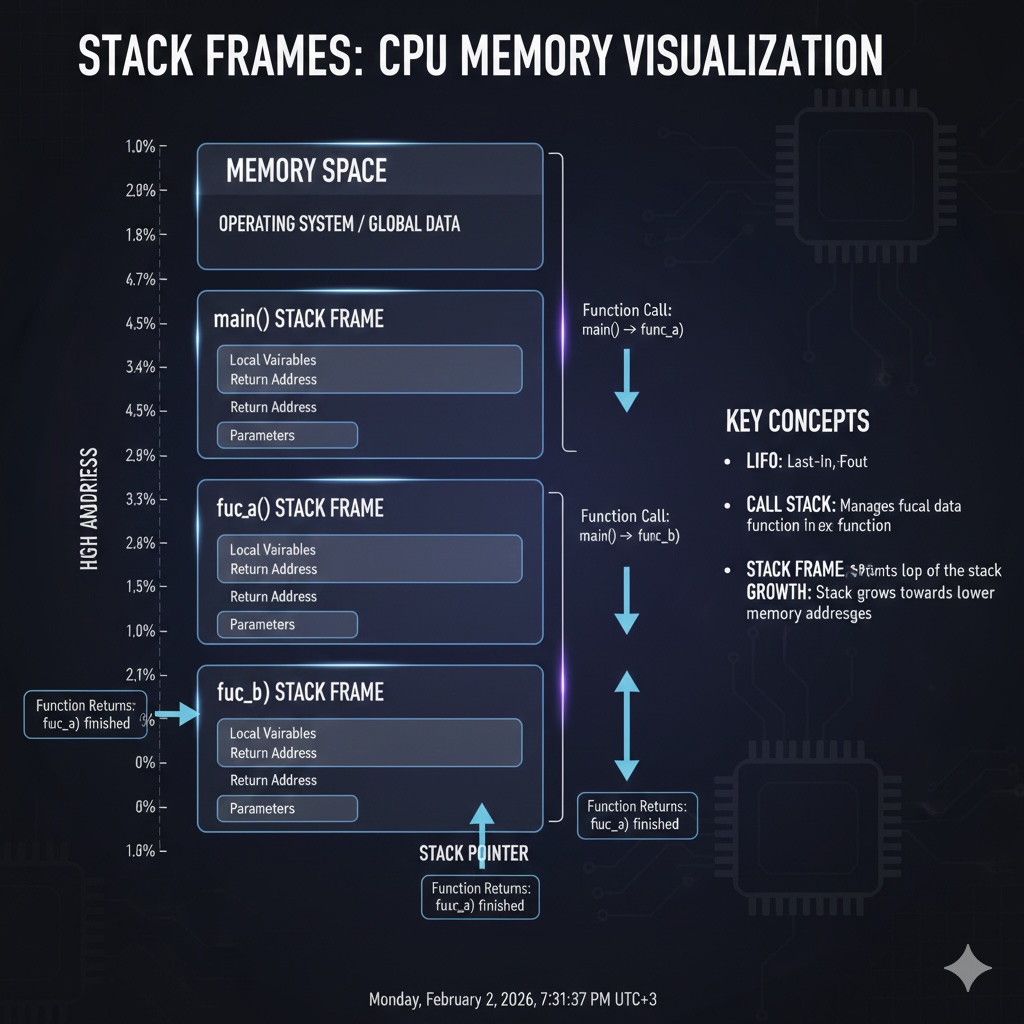

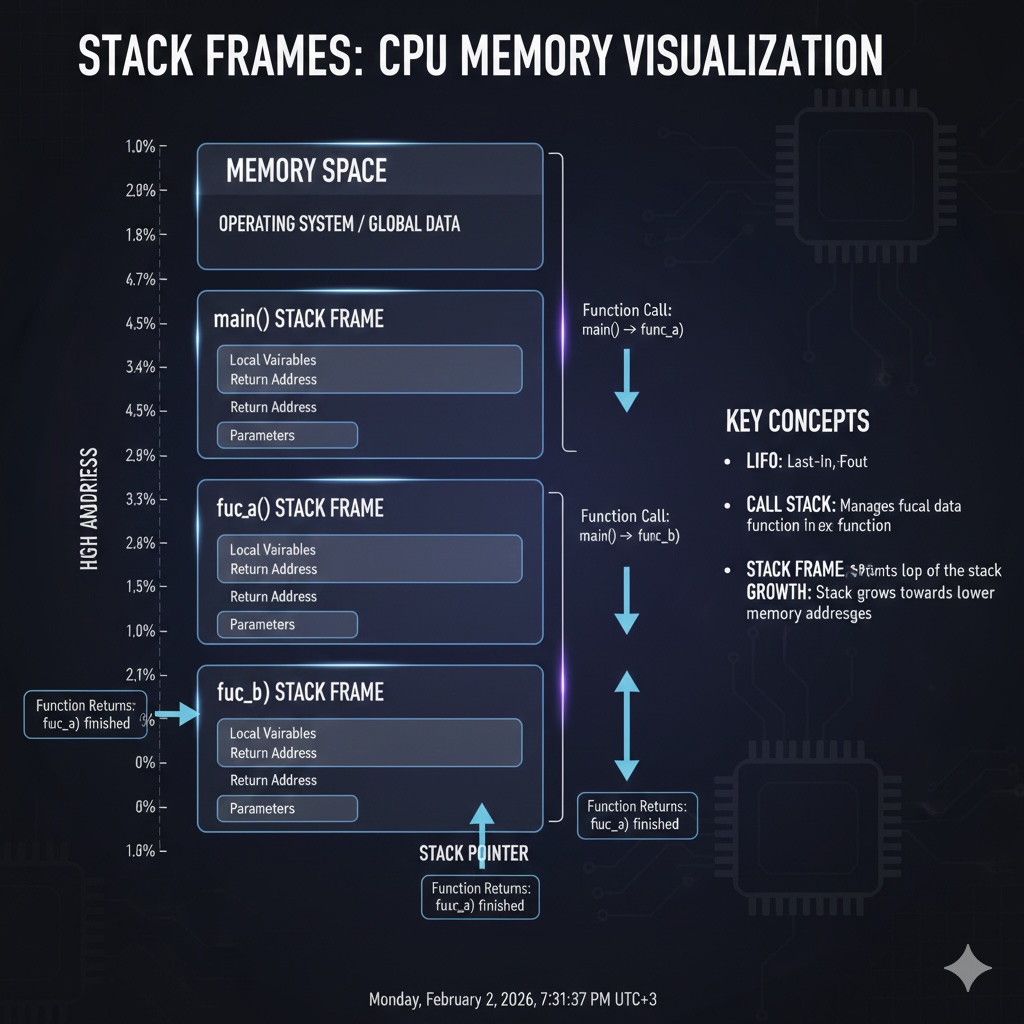

Every running program has a call stack that tracks function calls. It's a real stack in memory!

def func_a():

x = 1

func_b()

# Stack frame for func_a is created here

# Contains: local variable x, return address

def func_b():

y = 2

func_c()

# Stack frame for func_b pushed on top

# Contains: local variable y, return address

def func_c():

z = 3

# Stack frame for func_c on top

# When func_c returns, its frame is popped

func_a() # Main starts hereVisual representation (high addresses at top):

The call stack has a limited size (typically 1-8 MB). Exceeding it causes a stack overflow.

# DANGER: Infinite recursion causes stack overflow

def infinite_recursion():

return infinite_recursion() # Each call adds a frame

# This will crash with: RecursionError: maximum recursion depth exceeded

infinite_recursion()Common causes of stack overflow:

Production tip: For deep recursion, convert to iterative approach using an explicit stack, or increase stack size with compiler flags.

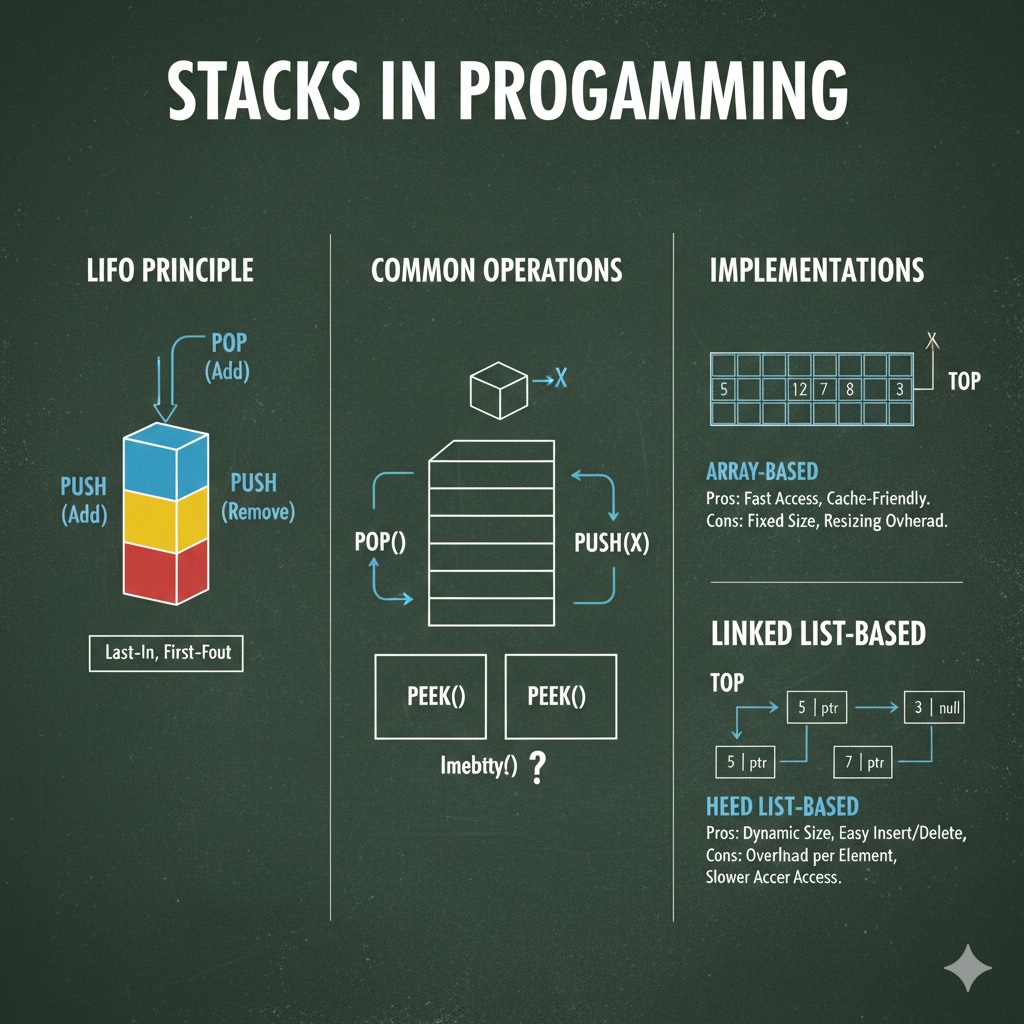

Array-based stacks are cache-friendly because elements are contiguous in memory.

Benchmark results (1 million push/pop operations):

| Implementation | Time (ms) | Cache Misses |

|---|---|---|

| Array (Python list) | ~45 ms | Low |

| Linked List | ~120 ms | High (pointer chasing) |

| collections.deque | ~35 ms | Low (optimized C implementation) |

Understanding the difference is crucial for systems programming:

| Aspect | Stack | Heap |

|---|---|---|

| Allocation speed | Very fast (just move SP) | Slower (search for free block) |

| Deallocation | Automatic (function return) | Manual (free/delete/garbage collection) |

| Size limit | Small (MBs) | Large (GBs) |

| Use case | Local variables, function calls | Dynamic data, large objects |

| Fragmentation | Never | Can happen |

1. Compilers & Interpreters

Function calls, expression evaluation, scope management

2. Web Browsers

Back/forward navigation, undo history, DOM traversal

3. Text Editors

Undo/redo, parenthesis matching in code editors

4. Network Routers

Packet processing, protocol layering

5. Game Engines

State machines, scene management, event handling

Level 1

Learn the fundamentals of stacks through an engaging pancake stack analogy.

Author

Mr. Oz

Duration

5 mins

Level 2

Implementation details, array vs linked list stacks, and common operations.

Author

Mr. Oz

Duration

8 mins

Level 3

Memory layout, call stacks, recursion, and performance considerations.

Author

Mr. Oz

Duration

12 mins